Beginner's introduction to Artificial Intelligence (AI) and Machine Learning (ML)

What problems Artificial Intelligence (AI) can solve today. What tools to use. How to integrate machine learning (ML) in your websites and apps.

⚠️ = extra important resources for beginners.

- My own AI/ML resources: https://www.tomsoderlund.com/ai/ai-resources

- Tweet: “Ok, I feel I need to learn AI/ML now.”

Thank you to @spimescape for mentoring me!

Introduction

I’m bullish about Artificial Intelligence (AI).

I’ve lived through three computing revolutions: the home PC, internet, and smartphones. I firmly believe that AI/ML is a 4th revolution.

AI/ML is a paradigm shift in computing. Ignoring AI in 2022 is like ignoring the iPhone in 2010.

It requires a new way of thinking and approaching problems. My background as an entrepreneur and a software developer is not completely helpful; some things need to be unlearned and relearned.

AI is not magic

The more I learn about artificial intelligence, the less magical it seems. The Singularity is far away, and artificial general intelligence (AGI) is nowhere near.

Instead, machine learning is all about math: identifying and generating patterns.

But at the same time, it is magical. Example: making a blurry image sharp is creating information out of nothing. Tasks that previously required an impossible number of man-hours are now very feasible. AI will change how we work.

“AI” or “ML”?

What’s the difference between Artificial Intelligence (AI) and Machine Learning (ML)? Today, AI and ML refer to similar things, but AI is used more by the media and outsiders, whereas ML is the more precise term used by people who research and build the technology.

More formally:

- Artificial Intelligence refers to the general ability of computers to emulate human thought and perform tasks in real-world environments.

- Machine Learning refers to the technologies that enable systems to identify patterns, make decisions, and improve themselves through experience.

Use cases for AI/ML

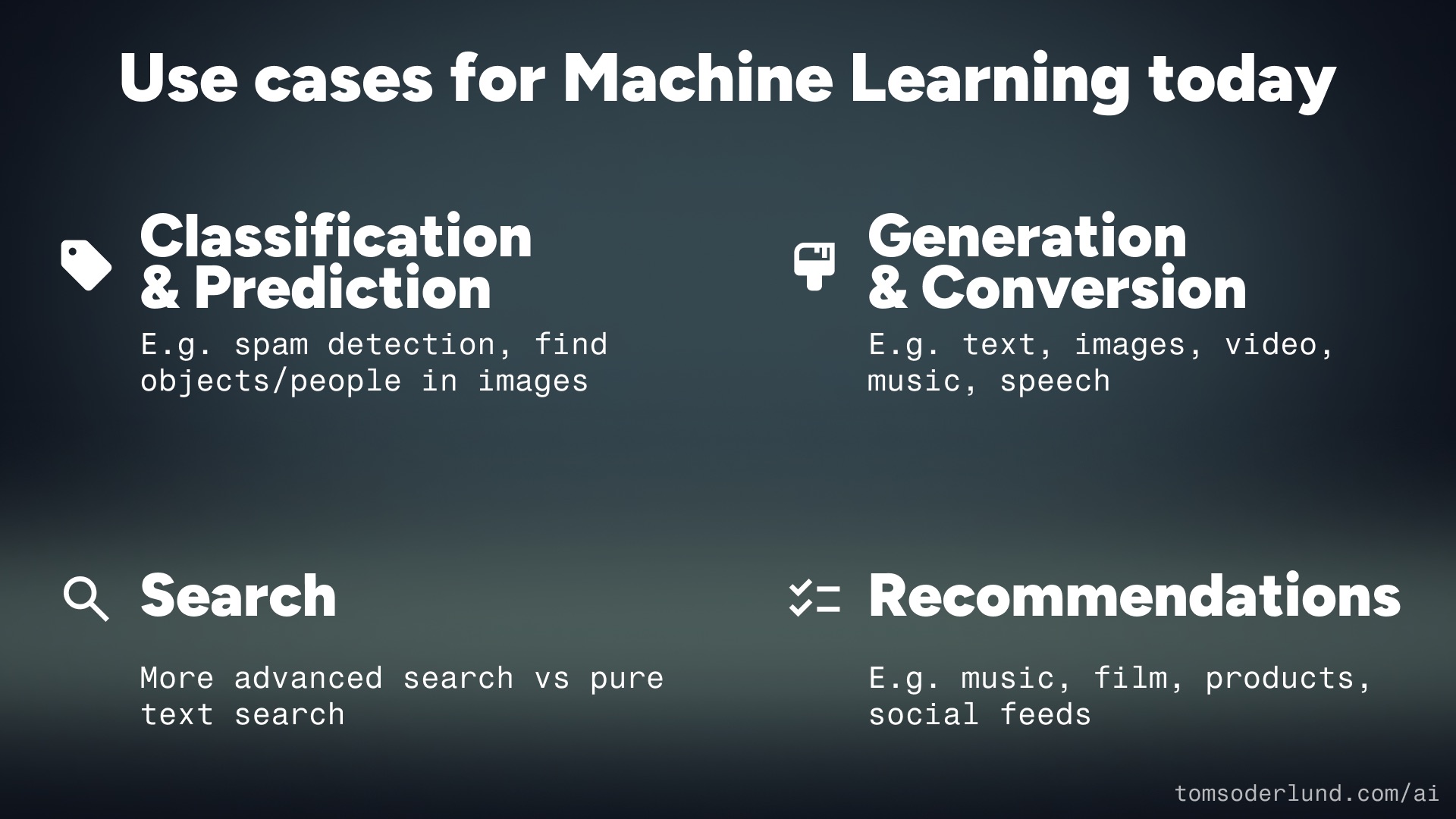

Broadly speaking, today we use Machine Learning (ML) for:

- Classification & Prediction (e.g. spam detection, find objects/people in images)

- Generation & Conversion (e.g. text, images, video, audio, speech)

- Search (more advanced than pure text search)

- Recommendations (e.g. music, film, products, social feeds)

Learning resources

⚠️ Two great free courses for ML beginners: Google’s (theoretical) and FastAI’s (practical).

- Google ML Foundational Courses

- ⚠️ FastAI: “Practical Deep Learning for Coders”

  - YouTube video lessons

  - Book: “Deep Learning for Coders with FastAI and PyTorch: AI Applications Without a PhD”: free on GitHub or on Amazon

- Kaggle course “Intro to Machine Learning”

- O’Reilly:

  - Courses: Data/ML engineer

  - Book: “Python Machine Learning”

  - Playlist

- VS Code: “Data Science in VS Code tutorial”

- W3schools Python course

- “Build your AI Product MVP in less than 24 hours”

People

Tools

- Programming language

- ⚠️ Notebook software

- ⚠️ ML code examples

- Frameworks & libraries

- Models

- Datasets

Programming language

Python is the main programming language for AI/ML.

⚠️ Notebook software

For writing/documenting/sharing ML code – essentially word processors with embedded (Python) code blocks. Uses .ipynb files, which are JSON structures containing Python code blocks and Markdown text blocks:

- Jupyter Notebook: the original (see below)

- VS Code has built-in Jupyter support

- Google Colab: work with files on Google Drive and GitHub

- Kaggle

- Gradio for building UIs inside notebooks

- IPython: an enhanced interactive Python shell, which also provides the Python kernel used by Jupyter.

- REPL: an interactive Python session (Read-Eval-Print Loop).
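Because an .ipynb file is just JSON, you can inspect one with Python's standard library. The sketch below builds a tiny two-cell notebook in memory and lists its cells. It assumes the nbformat 4 layout (a top-level "cells" list whose entries have "cell_type" and "source"); the cell contents are made up for illustration.

```python
import json

# A minimal notebook in the nbformat 4 layout (illustrative content only).
notebook_json = json.dumps({
    "nbformat": 4,
    "nbformat_minor": 4,
    "metadata": {},
    "cells": [
        {"cell_type": "markdown", "metadata": {}, "source": ["# My first notebook"]},
        {
            "cell_type": "code",
            "execution_count": None,
            "metadata": {},
            "outputs": [],
            "source": ["print(1 + 1)"],
        },
    ],
})


def summarize_cells(raw: str) -> list[tuple[str, str]]:
    """Return (cell_type, source text) pairs for every cell in a notebook."""
    nb = json.loads(raw)
    summary = []
    for cell in nb["cells"]:
        # "source" may be stored as a list of lines or as a single string.
        source = cell["source"]
        text = "".join(source) if isinstance(source, list) else source
        summary.append((cell["cell_type"], text))
    return summary


for cell_type, text in summarize_cells(notebook_json):
    print(f"{cell_type:>8}: {text}")
```

To look at a real notebook, pass in the file's contents instead, e.g. open("my_notebook.ipynb").read() (a hypothetical filename).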

Jupyter Notebook

- Press Tab to get autocompletion suggestions

- Inside the parentheses of a function, pressing Shift + Tab displays the function's signature and description

- ?[function] to get help on a function, e.g. ?verify_images, or ??verify_images for the full source code

- doc([function]) to get the documentation page

- %debug: inspect every variable

⚠️ ML code examples

- HuggingFace Spaces: discover ML apps

- Kaggle Code: explore, run, share ML code

Frameworks & libraries

- PyTorch – open source machine learning framework

- TensorFlow – Google

- Keras for deep learning

- JAX

- FastAI

PyTorch

Libraries:

- torch: main library

- torchaudio: sound

- torchtext: text/NLP

- torchvision: images and video

- torcharrow: data preprocessing in deep learning

- TorchData: data loading primitives for easily constructing flexible and performant data pipelines

- TorchRec: recommendations

- TorchServe: server

- TorchX: job launcher for PyTorch applications

Models

Collections of models:

- HuggingFace: collection of models, “GitHub for AI models”

- EleutherAI: creator of open source AI models

- OpenAI: creator of private/closed-source models, e.g. GPT-3 and DALL·E

Some models:

- Text-to-Text:

  - GPT-3: OpenAI

  - GPT-Neo 1.3B: a transformer model designed using EleutherAI’s replication of the GPT-3 architecture.

  - GPT-J 6B: a transformer model trained using Ben Wang's Mesh Transformer JAX.

- Text-to-Image:

  - DALL·E: OpenAI

  - Midjourney

  - Stable Diffusion: Stability AI

  - Imagen: Google

  - Parti: Google

- Text-to-Video:

  - Imagen Video: Google

  - Phenaki: Google

  - Make-a-Video: Meta

Datasets

- Kaggle Datasets

- Google Dataset Search

- Google Scholar

- UCI Machine Learning Repository

- VisualData

- CMU Libraries

- The Big Bad NLP Database

- Lists of datasets:

Machine Learning Theory

Machine learning algorithm:

Inputs + Weights → Model → Results + Loss

…then adjust Weights
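The loop above can be sketched in plain Python for the simplest possible model, a straight line y = w·x + b. This is a minimal illustration, not how a framework like PyTorch implements it: it assumes mean squared error as the loss and full-batch gradient descent, and the toy data (generated from y = 2x + 1) is made up.

```python
# Toy training data generated from y = 2x + 1 (made up for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]


def predict(x: float, w: float, b: float) -> float:
    """The model: a straight line with weight w and bias b."""
    return w * x + b


def mse_loss(w: float, b: float) -> float:
    """Loss: mean squared error between predictions and labels."""
    return sum((predict(x, w, b) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(epochs: int = 1000, learning_rate: float = 0.05) -> tuple[float, float]:
    w, b = 0.0, 0.0  # start with arbitrary weights
    n = len(xs)
    for _ in range(epochs):  # full batch: each step is one pass over all data
        # Inputs + Weights -> Model -> Results, compared with the labels.
        errors = [predict(x, w, b) - y for x, y in zip(xs, ys)]
        # Gradients of the MSE loss with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # ...then adjust the weights a small step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = train()
print(f"w={w:.3f}, b={b:.3f}, loss={mse_loss(w, b):.6f}")
```

A too-large learning rate makes the updates overshoot and diverge; a too-small one needs many more epochs to get close to w = 2, b = 1.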

⚠️ ML input data is always numeric (usually floating-point numbers), even when it originally came from text, images, video or sound. See “Feature engineering” below.

Terminology

- Model: what does the actual predicting; a file that has been trained to recognize certain types of patterns.

- Features: model inputs, x₁, x₂, etc.

- Label: model output, y, what we want to predict.

- Example: one piece of data (features, labeled or unlabeled).

- Dataset: a whole collection of examples. Subsets could be used for training, validation, or testing.

- Batch: the set of examples used in one training iteration.

- Epoch: one complete pass of the machine learning algorithm through the entire training dataset.

- Vector: an array of numbers.

- Matrix: a 2D array of numbers, rows × columns, e.g. 150×4.

- Tensor: a specialized data structure very similar to arrays and matrices, generalized to any number of dimensions (a vector is a 1D tensor, a matrix a 2D tensor). In PyTorch, tensors encode a model's inputs, outputs and parameters.

- Slope: m, the steepness (angle) of the line in linear regression.

- Weight: w, the importance of a feature.

- Bias: b, the offset (y-intercept) added to the weighted sum of the features.

- Loss: how far the model's predictions are from the true labels, e.g. mean squared error.

- Learning rate: the step size used when adjusting the weights during training.

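- Gradient descent: an iterative algorithm for finding a minimum of the loss, by repeatedly adjusting the weights in the direction that reduces it (the negative gradient).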

- Hyperparameters: the “knobs” that programmers tweak in machine learning algorithms.

- Deep learning: artificial neural networks with representation learning.

- Data augmentation: creating random variations of our input data so they appear different, but do not actually change the meaning of the data. E.g. image rotation and flipping.

- Generalization: a model’s ability to adapt properly to new, previously unseen data.

- Feature engineering: transforming raw inputs into numeric features that can be used in a model.

- One-hot encoding: representing a category as a vector of 0s with a single 1 marking that category.

- Binning: putting numeric values into “buckets” (ranges), turning a continuous feature into categories.

- Feature crosses: combine features to create synthetic features.

- Decision boundaries: a surface that separates data points belonging to different class labels.

- Regularization: penalizing model complexity to avoid overfitting (e.g. L2 regularization).

- Logistic regression: a model whose output is a probability (0.0-1.0).
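Three of the feature engineering techniques in the list above (one-hot encoding, binning and feature crosses) can be sketched in plain Python. The color categories, the temperature bucket edges and the choice of which features to cross are hypothetical examples; libraries such as pandas and scikit-learn offer ready-made versions of these transformations.

```python
from bisect import bisect_right


def one_hot(index: int, size: int) -> list[int]:
    """A vector of `size` zeros with a single 1 at position `index`."""
    return [1 if i == index else 0 for i in range(size)]


def bin_index(value: float, boundaries: list[float]) -> int:
    """Bucket number for a numeric value.

    With boundaries [10.0, 20.0] the buckets are: < 10, 10 to < 20, >= 20.
    """
    return bisect_right(boundaries, value)


def cross(a: list[int], b: list[int]) -> list[int]:
    """Feature cross of two one-hot vectors: one synthetic feature per pair of values."""
    return [x * y for x in a for y in b]


# Hypothetical raw example: a categorical color and a numeric temperature.
colors = ["red", "green", "blue"]
temperature_boundaries = [10.0, 20.0]  # hypothetical bucket edges

color_features = one_hot(colors.index("green"), len(colors))
temperature_features = one_hot(
    bin_index(14.5, temperature_boundaries), len(temperature_boundaries) + 1
)
print(color_features)        # one-hot encoded category
print(temperature_features)  # binned, then one-hot encoded
print(cross(color_features, temperature_features))  # 3 x 3 = 9 crossed features
```

Every value ends up as plain numbers, which is the form a model can consume.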

Learning types

- Supervised learning: labeled data/output

- Reinforcement learning: reward signal, e.g. chess engine

- Unsupervised learning: hidden structures – clustering, dimensionality reduction

Also: feature learning/representation learning
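As an example of unsupervised learning, here is a minimal k-means clustering sketch in plain Python: it groups unlabeled 1D numbers into k clusters without ever being told what the groups are. The data points, k = 2 and the naive initialization (the first k points) are assumptions for illustration; real implementations such as scikit-learn's KMeans use smarter initialization and work in many dimensions.

```python
def kmeans_1d(points: list[float], k: int, iterations: int = 10) -> list[float]:
    """Cluster 1D points into k groups; returns the final cluster centers."""
    centroids = points[:k]  # naive initialization: the first k points
    for _ in range(iterations):
        # Assignment step: put each point in the cluster with the nearest centroid.
        clusters: list[list[float]] = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)
        ]
    return centroids


data = [1.0, 9.0, 1.5, 8.0, 2.0, 10.0]  # two obvious groups, but no labels
print(kmeans_1d(data, k=2))
```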

Neural networks

Neural networks shine where you have data that is not easily transformable into features; images, for example, used to be hard to transform into good features.

NN math, simplified: multiply inputs by weights, add them up, replace negatives with zeroes.
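The “multiply, add up, replace negatives with zeroes” recipe describes one layer of a neural network: a weighted sum per neuron plus a bias, followed by the ReLU activation. A minimal plain-Python sketch; the inputs, weights and biases are made-up numbers, whereas in a real network the weights and biases would be learned during training.

```python
def relu(x: float) -> float:
    """Replace negatives with zero."""
    return max(0.0, x)


def dense_layer(
    inputs: list[float], weights: list[list[float]], biases: list[float]
) -> list[float]:
    """One fully connected layer: each neuron multiplies, adds up, then applies ReLU."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(x * w for x, w in zip(inputs, neuron_weights)) + bias
        outputs.append(relu(total))
    return outputs


inputs = [1.0, 2.0, 3.0]
weights = [
    [0.5, 1.0, 0.25],   # weights of neuron 1
    [-0.5, 0.5, -1.0],  # weights of neuron 2
]
biases = [0.0, 1.0]
print(dense_layer(inputs, weights, biases))
```

Stacking several such layers, each feeding its outputs into the next, gives a deep network.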

- CNN: Convolutional Neural Network, a deep learning neural network designed for processing structured arrays of data such as images.

- RNN: Recurrent Neural Network, a class of artificial neural networks where connections between nodes can create a cycle, allowing output from some nodes to affect subsequent input to the same nodes.

- Transformer: a deep learning model that adopts the mechanism of self-attention, differentially weighting the significance of each part of the input data. It is used primarily in the fields of natural language processing (NLP) and computer vision (CV).
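The core of a Transformer's self-attention is scaled dot-product attention: each position's output is a weighted average of all positions' value vectors, with the weights coming from a softmax over query·key similarities. The sketch below is a deliberate simplification in plain Python: it assumes the queries, keys and values are all the raw input vectors, whereas a real Transformer first multiplies the input by learned projection matrices and runs several attention heads in parallel. The toy token vectors are made up.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: list[list[float]]) -> list[list[float]]:
    """Scaled dot-product self-attention, simplified: queries = keys = values = x."""
    d = len(x[0])
    outputs = []
    for query in x:
        # Similarity of this position to every position, scaled by sqrt(d).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in x]
        # How much attention this position pays to each position.
        weights = softmax(scores)
        # Output: attention-weighted average of the value vectors.
        outputs.append(
            [sum(w * value[j] for w, value in zip(weights, x)) for j in range(d)]
        )
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy 2D "token" vectors
for row in self_attention(tokens):
    print([round(v, 3) for v in row])
```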

Text processing with Large Language Models (LLMs)

- LLM: Large Language Model

- NER: Named Entity Recognition

Vector search and Semantic search

- Vector search: finding the items whose vectors lie closest to the search key's vector in vector space.

- Semantic search: using NLP to transform the search phrase into vectors, then using vector search to find the items closest to them in vector space. These vectors are usually called embeddings.

- Embeddings: a special type of vector produced by running an input through an ML model, usually a neural network.

- Dense retrieval: using embeddings to search.
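Vector search boils down to comparing a query vector with stored vectors and returning the closest ones. Below is a minimal brute-force sketch in plain Python using cosine similarity. The three-dimensional “embeddings” are hand-made stand-ins (hypothetical); in semantic search they would come from an embedding model and usually have hundreds or thousands of dimensions, and large systems typically use an approximate nearest-neighbor index instead of comparing against every item.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Ranges from -1 (opposite) to 1 (same direction); 0 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def vector_search(
    query: list[float], items: dict[str, list[float]], top_k: int = 2
) -> list[tuple[str, float]]:
    """Brute force: score every item against the query, return the best matches."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in items.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]


# Hypothetical embeddings (made up; real ones would come from an ML model).
documents = {
    "How to bake bread": [0.9, 0.1, 0.0],
    "Sourdough starter tips": [0.8, 0.2, 0.1],
    "Fixing a flat bike tire": [0.0, 0.1, 0.9],
}
query_embedding = [0.85, 0.15, 0.05]  # hypothetical embedding of "bread recipe"

for name, score in vector_search(query_embedding, documents):
    print(f"{score:.3f}  {name}")
```

Both baking documents rank above the bike-repair one even though the query shares no exact words with them; that is the point of searching by meaning rather than by text.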

Deployment

There are two main use cases for deploying ML code: training and inference.

For inference, there are several options:

- Use your own cloud compute/GPU and have your backend use that.

- AWS SageMaker Inference service and ping that from your backend.

- GCP’s ML Inference service (Vertex AI) and ping that from your backend.

- HuggingFace Inference Endpoints: https://huggingface.co/inference-endpoints